The Rise of Deepfakes and the Erosion of Trust: How AI-Generated Content Is Shattering Online Communities

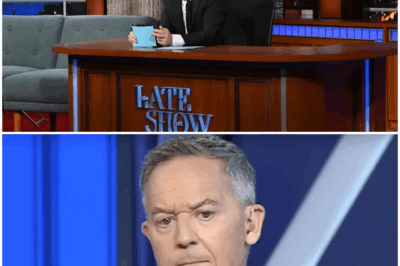

In January 2023, a 51-second clip of Stephen Colbert went viral. The Late Show host appeared shaken, his voice trembling as he seemingly denounced free speech. Social media exploded—Colbert’s supporters turned against him, Democrats argued in private forums, and accusations flew. Three days later, the truth emerged: the clip was fake. A deepfake.

This wasn’t just a doctored video. It was a meticulously crafted AI-generated forgery, designed to split a community that once rallied behind him. And it worked. The damage? Over **2,000 shares, seven heated arguments, and a chilling realization: the statement they had been debating was never made.**

Welcome to the **age of synthetic reality**, where trust is the first casualty.

—

The Anatomy of a Deepfake Attack

Deepfakes are AI-generated media (images, audio, or video) that imitate real people with alarming accuracy. Many are built with **Generative Adversarial Networks (GANs)**, autoencoders, or diffusion models, which learn from existing footage and recordings of a target to replicate facial expressions, voice, and mannerisms.

How the Colbert Deepfake Spread

1. Seeding the Clip: It first appeared on **Twitter** (since renamed X), then spread rapidly through Facebook groups and Reddit.

2. Bot Amplification: Over **200 fake accounts** boosted engagement within 90 minutes, many linked to past misinformation campaigns.

3. Psychological Hooks: The clip preyed on existing frustrations—free speech debates, political fatigue, distrust in media.

4. Self-Destruction: Once the lie was exposed, moderators scrubbed posts, but the **emotional damage remained**.
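The bot-amplification step above suggests one simple signal a moderator could watch for: many distinct accounts sharing the same clip inside a short window. The sketch below is a minimal illustration of that idea only. The function name `first_burst`, the `(timestamp_minutes, account_id)` input format, and the default thresholds (borrowed from the 200 accounts and 90 minutes mentioned above) are all assumptions, and a burst by itself does not prove automation, since organic virality can look similar.

```python
from collections import deque


def first_burst(shares, window_minutes=90, min_accounts=200):
    """Return the timestamp at which the number of distinct accounts sharing
    within the trailing window first reaches min_accounts, or None.

    shares: iterable of (timestamp_minutes, account_id) pairs (hypothetical format).
    """
    window = deque()   # share events currently inside the trailing window
    counts = {}        # account_id -> number of its shares inside the window
    for ts, account in sorted(shares, key=lambda s: s[0]):
        window.append((ts, account))
        counts[account] = counts.get(account, 0) + 1
        # Drop events older than the trailing window.
        while window[0][0] < ts - window_minutes:
            _, old_account = window.popleft()
            counts[old_account] -= 1
            if counts[old_account] == 0:
                del counts[old_account]
        if len(counts) >= min_accounts:
            return ts
    return None
```

Counting distinct accounts rather than raw shares keeps one hyperactive user from triggering the alarm; in practice this would be combined with other signals such as account age or shared infrastructure.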

Researchers at CrowdTangent, a misinformation watchdog, traced the IPs to servers in **Frankfurt and Romania**, used in earlier disinformation campaigns.

Why This Deepfake Was Different

Most high-profile deepfakes put words in the mouths of **celebrities or politicians** for laughs or for an obvious partisan goal: think fake Joe Biden robocalls or Tom Cruise TikTok skits. This one was **more sinister**:

– No obvious political agenda: It didn’t push Republican talking points; it turned Democrats against each other.

– Weaponized nostalgia: Colbert was a unifying figure during the Trump era—attacking him weakened collective trust.

– Near-undetectable flaws: The video was **98% convincing**, with only subtle errors (glare mismatches, audio sync issues) giving it away.

—

From Viral Lies to Real-World Consequences

1. Social Fracturing

The clip didn’t just spark arguments—**it rewired relationships**. Friends unfriended each other. Group chats dissolved. A moderator admitted:

> *“We were debating Stephen. But Stephen wasn’t even there.”*

2. Legitimizing Misinformation

Before debunking, the clip:

– Was cited by **7 “news” outlets** as “proof” of Colbert’s “hypocrisy.”

– Got **four Reddit users banned** for defending him—**before the truth came out**.

– Racked up **2.7 million impressions**—most from users who never saw the correction.

3. The Silence of the Real Colbert

Colbert **never acknowledged** the deepfake. Was it strategy, exhaustion, or resignation?

– If he responded, conspiracy theorists would claim **he was covering up**.

– Staying silent meant **letting the lie fade**—but also **letting distrust linger**.

—

The Bigger Problem: Who Controls Reality?

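Deepfakes are becoming **cheaper, faster, and harder to detect**. One widely quoted forecast held that as much as **90% of online content could be synthetically generated** by the mid-2020s, a figure best treated as speculative. The implications?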

1. Death of Eyewitness Reliability

– Courts rely on video evidence. What happens when **any footage can be fake?**

– Police bodycams, security tapes, and whistleblower leaks could all be **manufactured**.

2. The End of Consensus Reality

If both sides can claim videos are **fake**, how do we agree on facts?

– **Political extremists** will dismiss unfavorable recordings as deepfakes.

– **Gaslighting at scale**: Imagine a leader denying their own speech, claiming AI tampering.

3. Erosion of Trust in Institutions

– **Journalism**: If news outlets unknowingly spread deepfakes, credibility collapses.

– **Science**: Climate data, medical research—what if falsified studies go viral?

– **Democracy**: Elections hinge on public trust. Deepfakes could **sway votes silently**.

—

Fighting Back: Can We Detect (and Defeat) Deepfakes?

Technical Solutions

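1. Provenance and Watermarking

– Attach digital watermarks or cryptographically signed metadata to footage at capture or publication, so viewers can check that it has not been altered.

– Some proposals record these fingerprints on a blockchain so that the record itself is hard to tamper with.

2. AI Detection Tools

– Microsoft’s **Video Authenticator** analyzes photos and video and returns a confidence score that the media has been artificially manipulated.

– OpenAI has previewed a classifier for spotting images produced by its own image generator.

– **Intel’s FakeCatcher** checks for blood-flow signals in video pixels (real faces pulse slightly).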
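The blood-flow idea can be illustrated with a toy example. The sketch below is not Intel's method. It assumes a face region has already been located and reduced to one average green-channel value per frame (a hypothetical preprocessing step), and it simply asks whether that signal has a strong periodic component in a plausible heart-rate band. The function names, the 0.7 to 4.0 Hz band, and the 0.5 threshold are illustrative assumptions.

```python
import math


def dominant_frequency(samples, fps, low_hz=0.7, high_hz=4.0):
    """Return (frequency_hz, power_fraction) for the strongest spectral
    component inside [low_hz, high_hz], using a naive discrete Fourier transform.

    samples: per-frame mean green-channel values of a face region (assumed input).
    power_fraction: share of the signal's total non-DC power held by that component.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant (DC) offset
    best_freq, best_power, total_power = 0.0, 0.0, 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        total_power += power
        if low_hz <= freq <= high_hz and power > best_power:
            best_freq, best_power = freq, power
    if total_power == 0:
        return 0.0, 0.0  # perfectly flat signal: nothing periodic at all
    return best_freq, best_power / total_power


def has_pulse_like_signal(samples, fps, min_fraction=0.5):
    """Heuristic: True if one heart-rate-band frequency dominates the signal."""
    _, fraction = dominant_frequency(samples, fps)
    return fraction >= min_fraction


# Synthetic check: 10 seconds at 30 fps with a faint 1.2 Hz (72 bpm) oscillation.
fps = 30
pulse = [0.5 + 0.01 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
flat = [0.5] * 300
```

A real detector would also have to handle head motion, lighting changes, and video compression, all of which can swamp a signal this faint, so a check like this is at most one weak signal among many.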

Policy & Regulation

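– **Bans on Malicious Deepfakes**: Several U.S. states have passed laws against deceptive election deepfakes, and the EU’s AI Act requires that AI-generated or manipulated media be disclosed as such.

– **Platform Accountability**: Should X and Facebook flag suspected AI content?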

Media Literacy: The Best Defense

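1. **Reverse Image Search**: Tools like **Google Lens** can help trace where a still frame first appeared.

2. **Audio Analysis**: Audio editors like **Audacity** can display a spectrogram, which may expose abrupt cuts or unnatural patterns in a cloned voice.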

3. **Emotional Resilience**

– **Pause before sharing**. Ask: *Who benefits if I believe this?*

– **Check sources**. If only sketchy sites report it, suspect manipulation.

– **Defaults matter**. Assume viral clips *could* be fake until verified.

—

A Warning from History: The Firehose of Falsehood

The **“Firehose of Falsehood”** is the name RAND researchers gave in 2016 to the Russian propaganda model: flooding every channel with so many lies, so quickly, that the truth drowns. Today, **AI is the firehose’s turbocharger**: it automates deception at scale.

The Colbert deepfake was a **beta test**. Future attacks could:

– **Fabricate riots** to justify crackdowns.

– **Sabotage stock markets** with fake CEO resignations.

– **Spark diplomatic crises** using manipulated leader speeches.

—

Conclusion: How Do We Survive the Post-Truth Era?

Deepfakes won’t disappear. The only solution is **rebuilding trust from the ground up**:

1. **Demand Transparency**

– Governments, tech giants, and media must **prove authenticity**—or lose credibility.

2. **Reward Critical Thinking**

– Fact-check before reacting. Reward those who debunk, not just those who outrage.

3. **Protect Human Connection**

– The more isolated we are online, the easier deception spreads. **Talk face-to-face**.

The Colbert case proved **one unshakable truth**: when we turn on each other, **we play into the deceiver’s hands**.

The next deepfake won’t ask permission—it’ll already be in your feed. The question is: **Will you recognize it before it divides you?**
